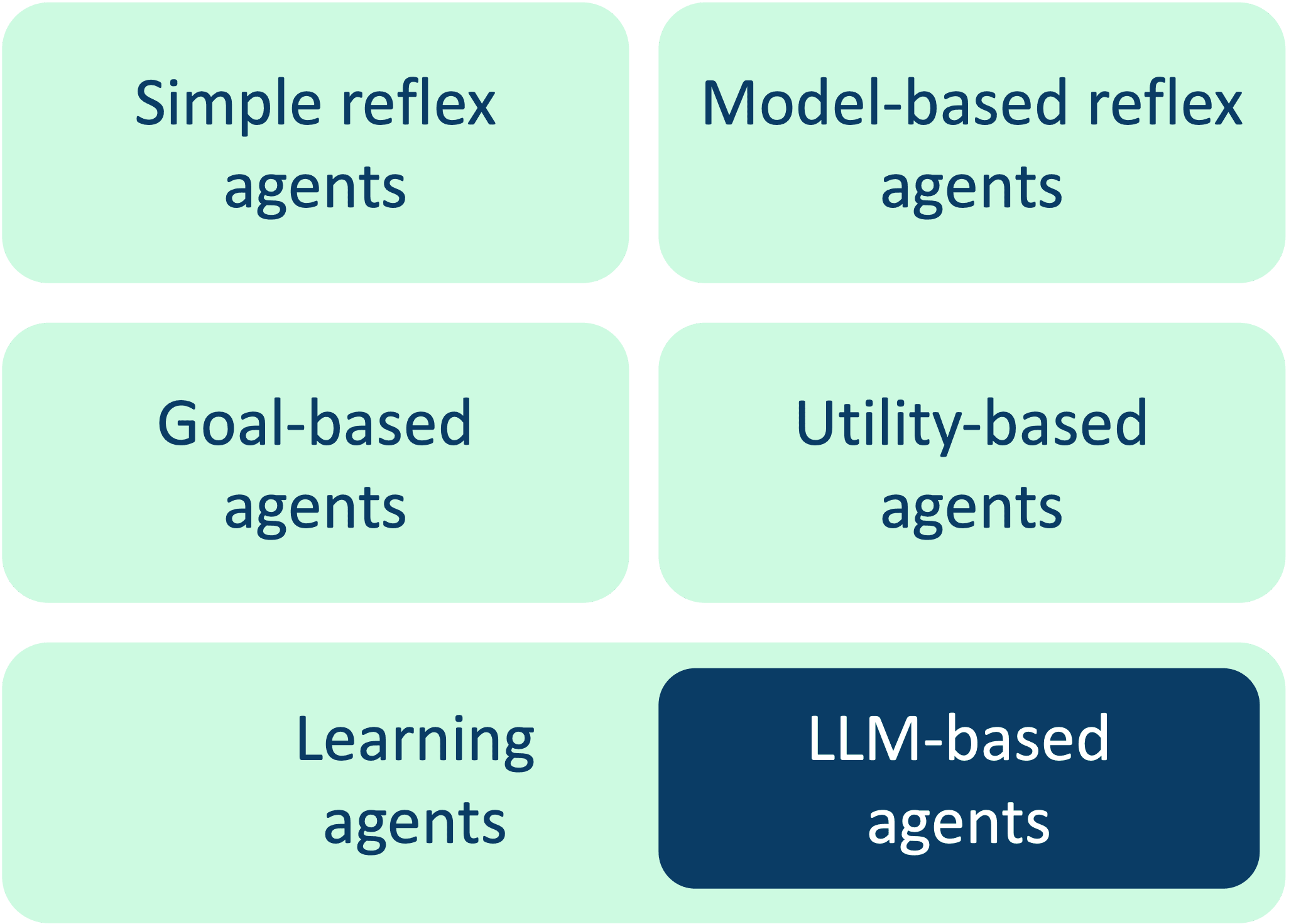

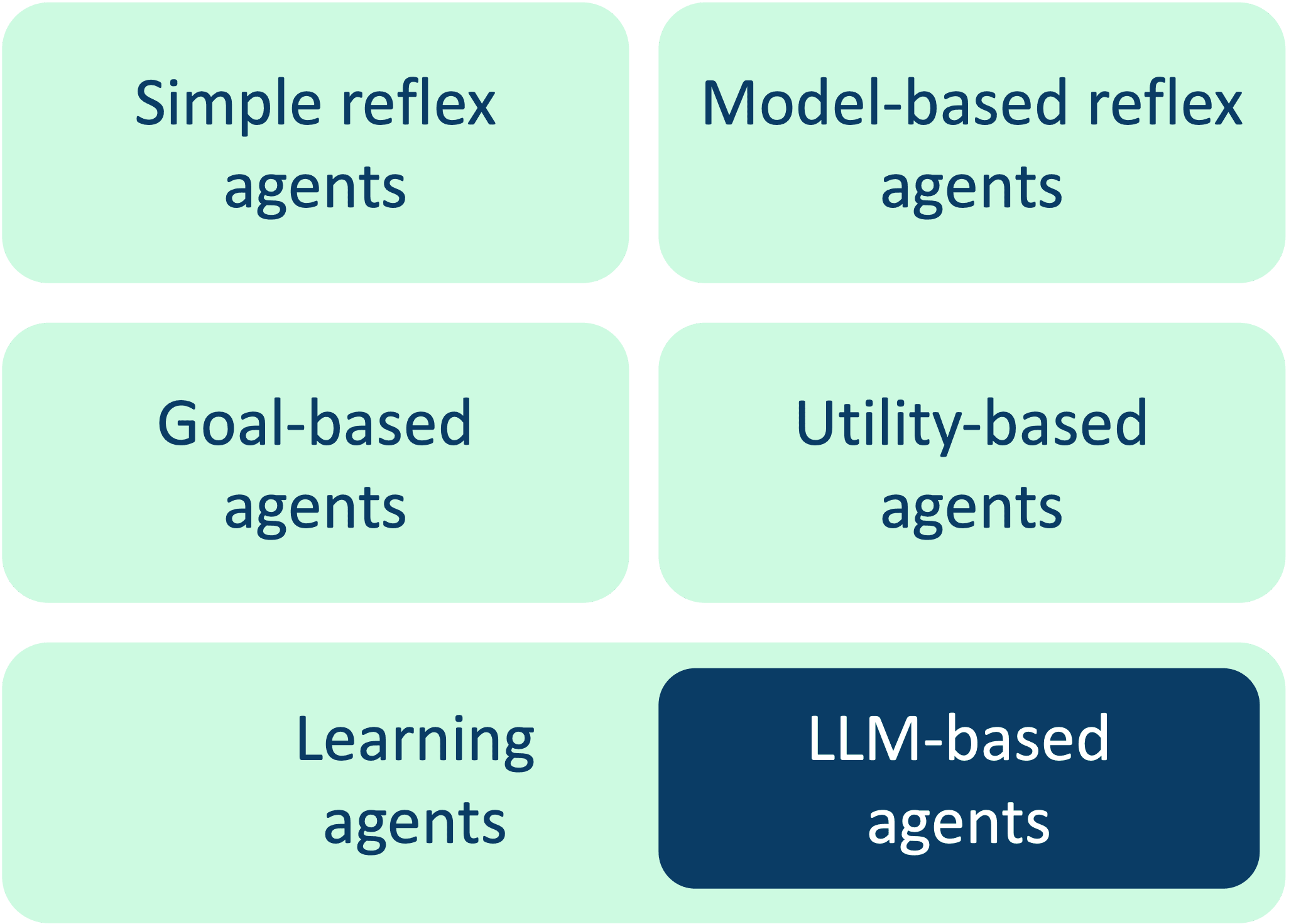

Overview of LLM-based agents, including single-agent and multi-agent systems, core components (planning, memory, tool use), and common coordination and planning patterns.

An in-depth exploration of preference alignment techniques for LLMs, including Reinforcement Learning from Human Feedback (RLHF) and Direct Preference Optimization (DPO).

This article covers Retrieval-Augmented Generation (RAG), which combines a retrieval component with a generative model to improve GenAI applications efficiently. It outlines the RAG architecture, in which a vector database retrieves relevant data that the model then uses to generate its response.

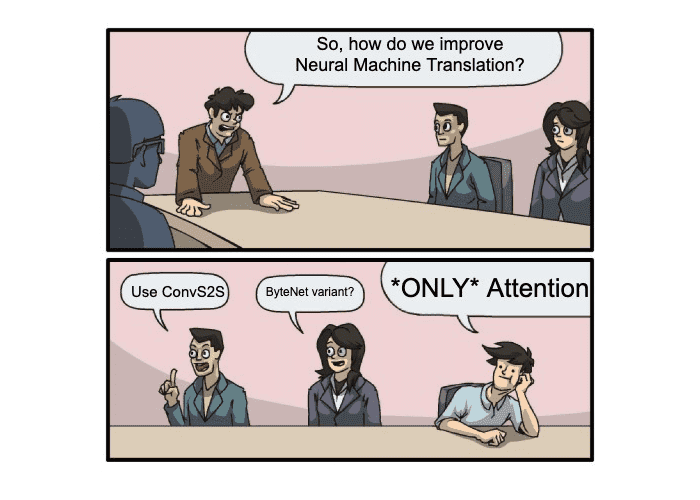

This article explores the evolution from Transformers to Large Language Models (LLMs). It details the mechanisms of self-attention and multi-head attention, the role of position embeddings, the main types of transformer models, and how LLMs are trained and fine-tuned.

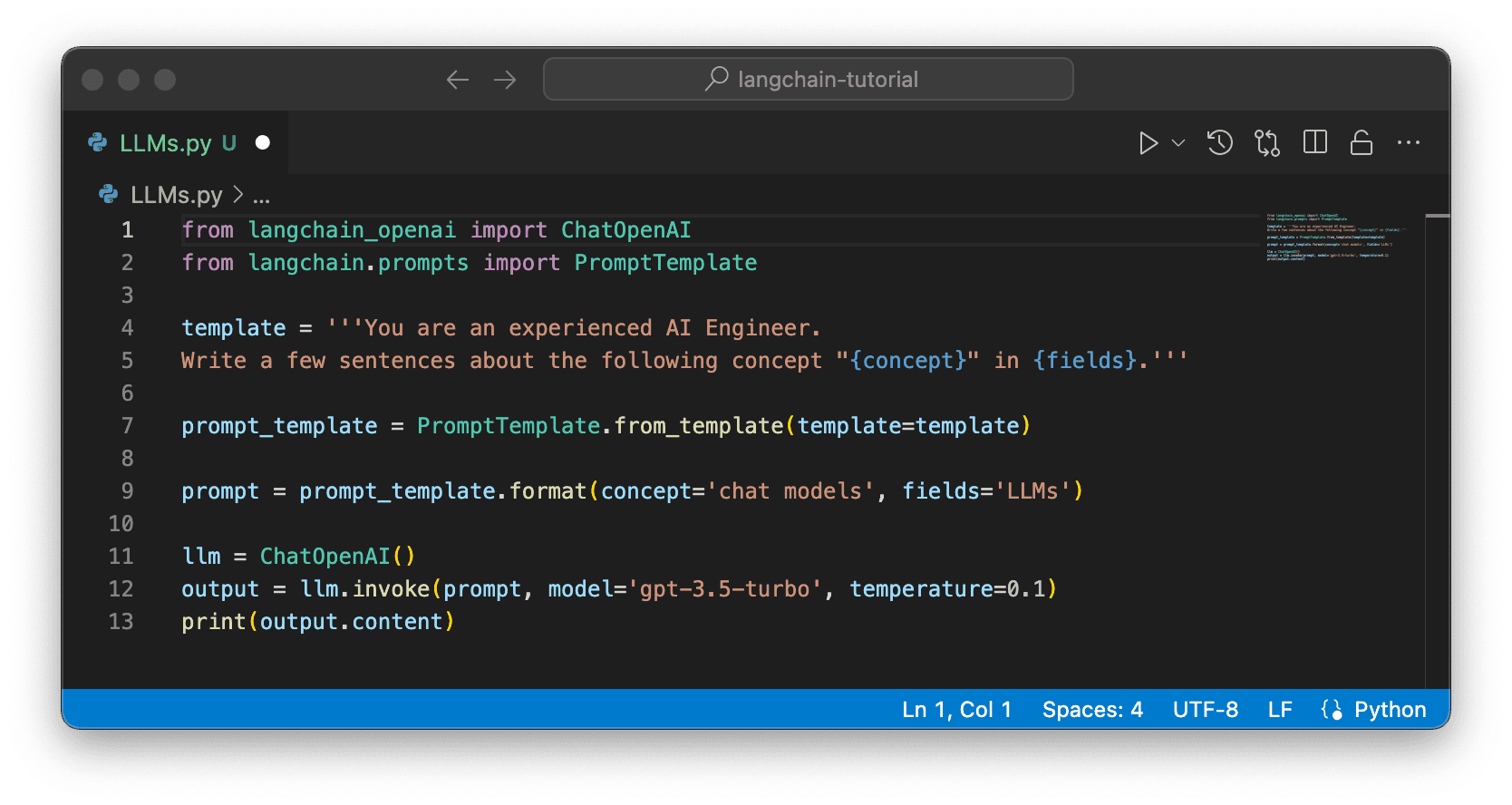

This article provides an overview of the core LangChain concepts for working with Large Language Models (LLMs), including LLM components, prompt templates, indexing, memory, chains, and agents.

This article provides an overview of Large Language Models (LLMs), their evolution, and the core concepts of LangChain, an open-source framework for building applications based on LLMs.